One-shot Imitation Learning via Interaction Warping

What if a robot could learn to manipulate an object it has never seen before, from just a single demonstration? This paper introduces Interaction Warping — a method that makes this possible by decoupling what a robot learns about actions from the specific objects it trains on.

The Problem

Teaching robots to manipulate objects is hard. Traditional approaches require hundreds or thousands of demonstrations, and even then, robots struggle to generalize to objects they haven't been trained on. Pick up a mug in training, and the robot might fail when faced with a cup that's slightly different. This brittleness makes real-world deployment impractical.

The core challenge is that most imitation learning methods entangle the action (how to grasp) with the specific object geometry (the exact shape of this mug). Change the object, and the learned action breaks.

Our Approach: Interaction Warping

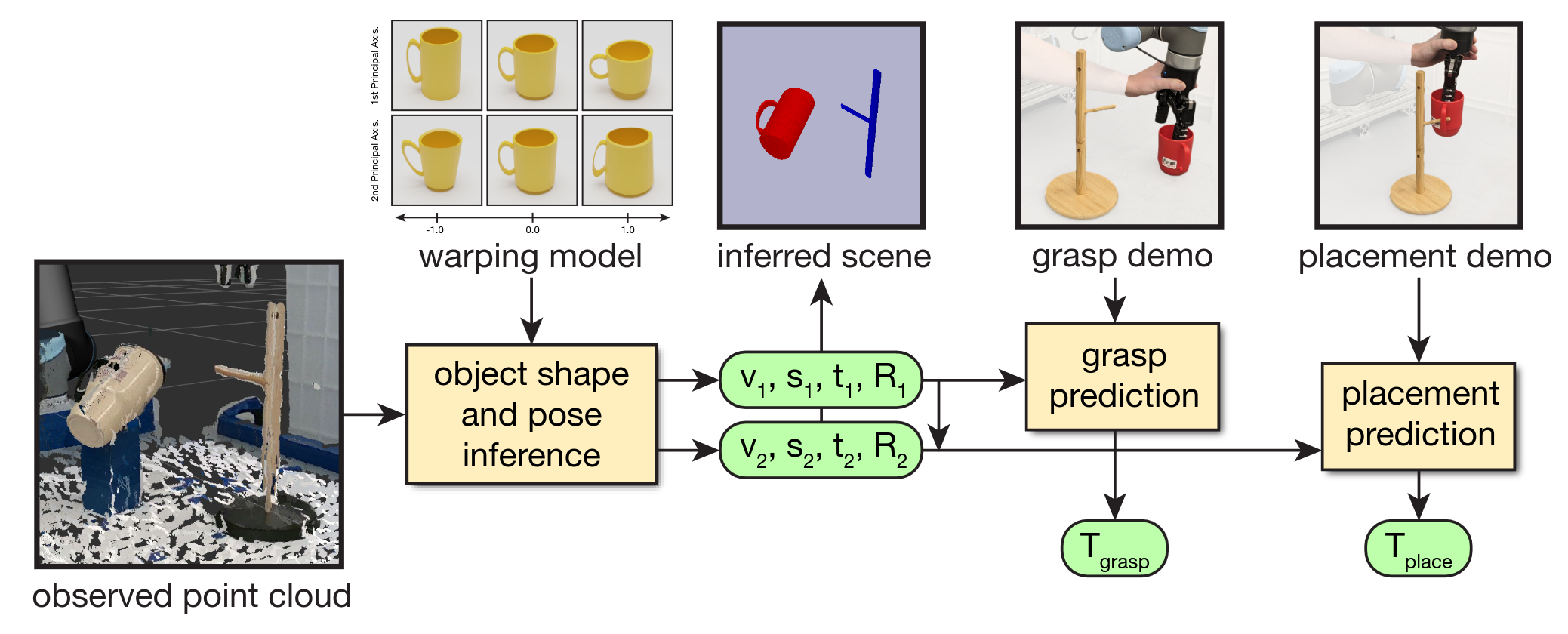

We tackle this by separating the "what to do" from the "what it looks like." Our method, Interaction Warping, learns SE(3) manipulation policies (policies that output full 3D positions and orientations) from a single demonstration. It encodes actions as spatial keypoints on object surfaces, then warps those keypoints when the object shape changes.

Segment & Perceive

Using cutting-edge segmentation models (including Meta's Segment Anything), we extract clean point clouds of target objects from cluttered real-world scenes.
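
To make the step from a segmentation mask to a point cloud concrete, here is a minimal sketch of back-projecting masked depth pixels with a pinhole camera model. It is an illustration, not the pipeline used in the paper: the function name `mask_to_point_cloud`, the intrinsics values, and the synthetic mask (standing in for the output of a segmentation model) are all assumptions.

```python
import numpy as np

def mask_to_point_cloud(depth, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels into camera-frame 3D points.

    Hypothetical helper: assumes a pinhole camera with focal lengths
    (fx, fy) and principal point (cx, cy), and depth in metres.
    """
    # Keep only pixels inside the mask that have a valid depth reading.
    v, u = np.nonzero(mask & (depth > 0))  # v = pixel rows, u = pixel columns
    z = depth[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)

# Synthetic stand-ins: a flat depth image and a 2x2 object mask.
depth = np.full((4, 4), 2.0)
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True

points = mask_to_point_cloud(depth, mask, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
print(points.shape)
```

In a real scene the mask would come from the segmentation model and the depth from an RGB-D camera, and the resulting points would usually still need outlier filtering.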

Warp the Shape

We fit deformable models that align point clouds across different object instances within the same category, learning how shapes vary within that category.
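
One common way to capture how shapes vary within a category is a low-dimensional linear model over per-point displacements from a canonical shape. The sketch below uses PCA for this and is only an illustration under strong assumptions: the training shapes are taken to be already in point-to-point correspondence with the canonical shape, and the names `fit_warp_model`, `warp`, and `infer_latent` are hypothetical rather than taken from the paper's code.

```python
import numpy as np

def fit_warp_model(canonical, instances, n_components):
    """PCA over displacements from the canonical shape to each instance.

    Assumes instances[i][j] corresponds to canonical[j] (an assumption;
    real point clouds would first need a registration step).
    """
    disp = (instances - canonical).reshape(len(instances), -1)
    mean = disp.mean(axis=0)
    _, _, vt = np.linalg.svd(disp - mean, full_matrices=False)
    return mean, vt[:n_components]

def warp(canonical, mean, components, latent):
    """Deform the canonical shape using a latent shape code."""
    return canonical + (mean + latent @ components).reshape(canonical.shape)

def infer_latent(canonical, mean, components, target):
    """Project a corresponded target shape onto the PCA basis."""
    residual = (target - canonical).reshape(-1) - mean
    return components @ residual  # rows of components are orthonormal

rng = np.random.default_rng(0)
canonical = rng.normal(size=(50, 3))

def stretch(scale):
    # Toy "category": the canonical shape stretched along the z axis.
    return canonical * np.array([1.0, 1.0, scale])

instances = np.stack([stretch(s) for s in (0.5, 1.0, 1.5, 2.0)])
mean, components = fit_warp_model(canonical, instances, n_components=1)

target = stretch(1.8)  # an unseen instance of the toy category
latent = infer_latent(canonical, mean, components, target)
reconstruction = warp(canonical, mean, components, latent)
print(np.abs(reconstruction - target).max())
```

The toy category here varies along a single axis, so one component reconstructs an unseen instance. Real categories need more components, and the latent code would have to be fitted to a partial, noisy point cloud without known correspondences.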

Transfer the Action

Manipulation actions encoded as keypoints automatically deform alongside the warped geometry, enabling the robot to execute the same skill on entirely new objects.
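
Once the keypoints have moved with the warped geometry, they still need to be turned into a pose the robot can execute. One standard option, shown here as an assumption rather than as the paper's exact procedure, is a least-squares rigid fit between the demonstration keypoints and their warped counterparts (the Kabsch algorithm). The name `fit_rigid_transform` and the example keypoints are hypothetical.

```python
import numpy as np

def fit_rigid_transform(source, target):
    """Least-squares rotation and translation (Kabsch algorithm).

    Returns (rot, t) such that target is approximately source @ rot.T + t.
    """
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    h = (source - src_c).T @ (target - tgt_c)  # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    # Flip the last axis if needed so the result is a proper rotation.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    rot = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return rot, tgt_c - rot @ src_c

# Keypoints recorded in the demonstration (illustrative values).
demo_keypoints = np.array([
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])

# Stand-in for the keypoints after warping to a new object: here they are
# simply rotated 90 degrees about z and shifted, so the answer is known.
angle = np.pi / 2
true_rot = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
true_t = np.array([0.5, -0.2, 0.1])
new_keypoints = demo_keypoints @ true_rot.T + true_t

rot, t = fit_rigid_transform(demo_keypoints, new_keypoints)
print(np.round(rot, 3), np.round(t, 3))
```

With a genuinely deformed object the keypoints no longer match up to a rigid motion exactly, and the fit returns the closest SE(3) transform in the least-squares sense.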

Why This Matters

Most robots in factories today are programmed for one specific task with one specific object. If a warehouse changes its packaging or a factory introduces a new part, everything needs to be reprogrammed. Our work moves toward robots that can adapt on the fly — see a new object, understand its shape, and figure out how to interact with it based on prior experience with similar objects.

The ability to learn from a single demonstration is particularly powerful. It means a human operator could show a robot how to perform a task once, and the robot could generalize that skill to new objects in the same category. This sharply reduces the time and expertise needed to deploy robotic manipulation systems.

Results

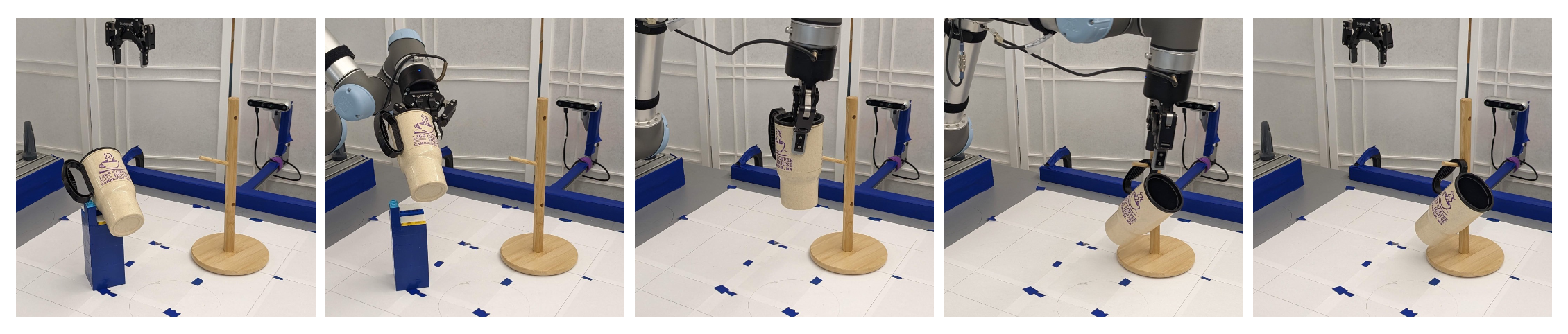

We validated Interaction Warping on both simulated and real-world object rearrangement tasks. The method successfully:

- Predicted 3D object geometry and grasping strategies in unconstrained environments

- Generalized manipulation behaviors from a single demonstration to novel objects

- Executed SE(3) pick-and-place, stacking, and rearrangement tasks in the real world

- Outperformed baseline one-shot methods on shape generalization benchmarks

My Contribution

My role focused on the perception pipeline — integrating state-of-the-art segmentation models to allow the robot to perceive and understand objects in real-world scenes. This involved bridging the gap between foundation vision models like Segment Anything and the downstream manipulation policy, ensuring the robot could reliably extract clean object representations from noisy, cluttered environments.

This work was done during my time at Northeastern University's Robot Learning Lab, collaborating with researchers from Brown University, Microsoft Research, Google DeepMind, and the University of Amsterdam.

From Research to Product

This research experience shaped how I think about AI products today. At SOLIDWORKS, I apply the same principle — bridging cutting-edge AI research with practical applications that engineers use daily. The question is always the same: how do you take a powerful AI capability and make it useful, reliable, and accessible to non-experts?

The segmentation and perception work I did for this paper directly informs my thinking about computer vision features in CAD — how AI can understand 3D geometry, recognize patterns, and augment human design workflows.